[00:00] (0.00s)

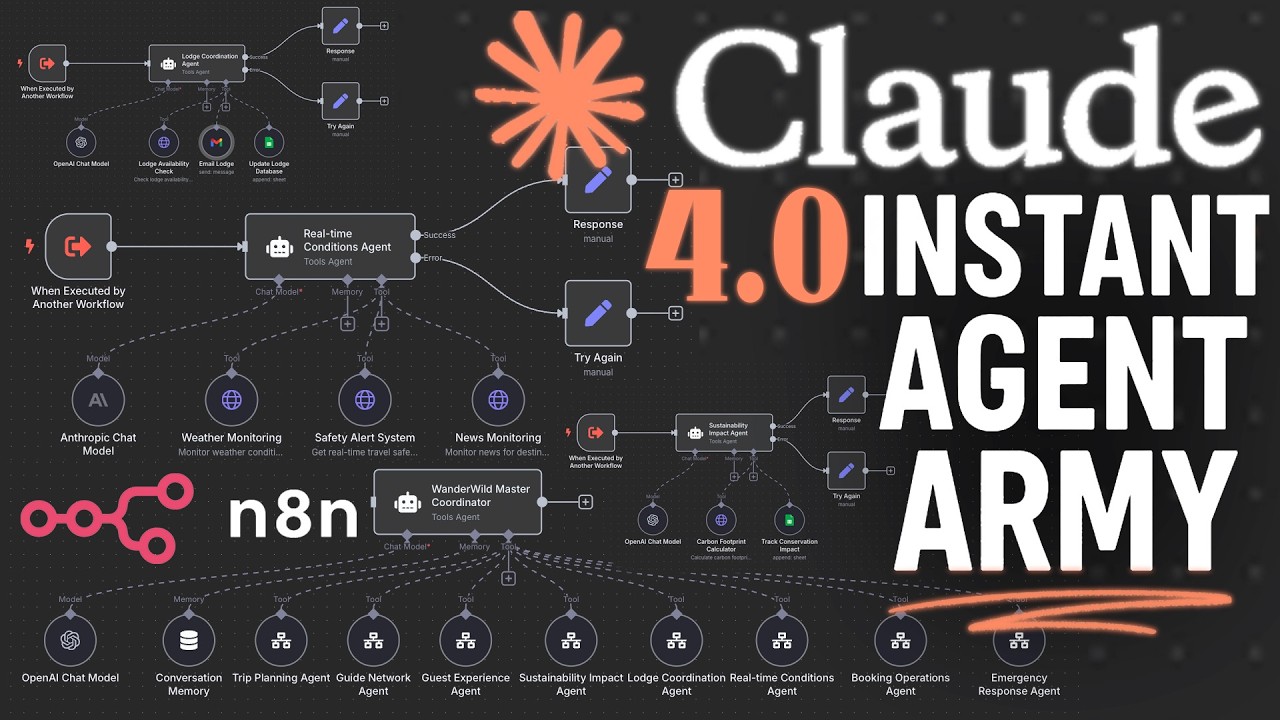

Imagine building an entire army of

[00:02] (2.56s)

agents from just one prompt. In this

[00:04] (4.80s)

video, I'm going to show you exactly how

[00:06] (6.56s)

to use the new Claude 4 Opus to instantly

[00:09] (9.60s)

generate an entire set of workflows.

[00:11] (11.76s)

You'll see firsthand how easy it is to

[00:13] (13.92s)

spin up a master orchestrating agent,

[00:16] (16.64s)

create specialized subworkflows that

[00:18] (18.64s)

report to that agent, and lastly, add

[00:21] (21.36s)

tools dynamically to those sub-agents

[00:24] (24.08s)

without you writing a line of code

[00:25] (25.68s)

yourself. And here's the craziest part.

[00:27] (27.92s)

This entire process will only take

[00:30] (30.08s)

minutes from start to finish. I'm going

[00:32] (32.16s)

to walk you through, step by step, the

[00:34] (34.08s)

fastest way to build sophisticated agent

[00:36] (36.48s)

systems, even if you're completely new

[00:38] (38.56s)

to automation. Let's dive in. All right,

[00:40] (40.40s)

so we're going to tackle two different

[00:41] (41.76s)

ways to assemble your agent army. Both

[00:44] (44.64s)

involve only one prompt each, but one of

[00:47] (47.12s)

them is going to use a Claude project,

[00:48] (48.48s)

and one of them we're going to just send

[00:49] (49.92s)

a straight chat message. And before I

[00:52] (52.16s)

show you how this prompt works and how

[00:53] (53.84s)

this entire system works, let's just

[00:55] (55.76s)

prove that it does work. Now if you send

[00:58] (58.16s)

this entire prompt we have here along

[01:00] (60.96s)

with a series of files (these are all

[01:03] (63.84s)

JSON files), with one master agent right

[01:06] (66.40s)

here called retrofure master assistant,

[01:09] (69.28s)

and then all these subworkflows

[01:11] (71.68s)

that report to this orchestrating agent.

[01:14] (74.64s)

So pretty much we're using Claude 4 Opus

[01:16] (76.88s)

and the power of extended thinking and

[01:19] (79.76s)

web search to be able to look at these

[01:22] (82.16s)

files, get a handle on how to create

[01:24] (84.64s)

the AI agent module, how they connect

[01:26] (86.80s)

together, what kind of tools can be

[01:28] (88.72s)

attached, and if we need to supplement

[01:31] (91.04s)

this information with additional

[01:33] (93.12s)

information not in Claude's training, we

[01:35] (95.20s)

can use web search, which is a newer

[01:37] (97.20s)

feature added in the past couple of months. And

[01:38] (98.96s)

if we're using Opus, all we have to do

[01:40] (100.80s)

is wait around five to 10 minutes for it

[01:43] (103.28s)

to run through this entire workflow. And

[01:45] (105.44s)

you'll see it first drafts multiple sets

[01:48] (108.40s)

of agents that it could put together.

[01:50] (110.64s)

And then I ask it for just a sample

[01:52] (112.48s)

of three of these agents, and after 5 to

[01:54] (114.88s)

10 minutes, Claude 4 Opus uses all the

[01:57] (117.52s)

tools at its disposal to come up with

[01:59] (119.92s)

not just a list of candidate agents,

[02:02] (122.40s)

but full drafts of the first

[02:05] (125.28s)

three agents. And if you want, you can

[02:06] (126.88s)

keep going and say create the rest. And

[02:09] (129.20s)

the result is you get a series of JSON

[02:11] (131.68s)

files like this one. And you can click

[02:13] (133.92s)

on the little drop down here. You'll see

[02:15] (135.44s)

we've put together all of these

[02:17] (137.12s)

different agents with one single prompt.

[02:19] (139.84s)

And then we can just go here, copy, and

[02:23] (143.28s)

seamlessly go into n8n, paste this, and

[02:26] (146.80s)

now you have an agent that isn't just an

[02:29] (149.52s)

empty agent. You can double-click and

[02:31] (151.44s)

you'll see that an entire prompt on how

[02:34] (154.00s)

the agent works and how it should use

[02:36] (156.32s)

all of its sub tools is set up for you

[02:38] (158.88s)

literally in minutes and ready to go.

[02:41] (161.36s)

And if you click out, you'll see all the

[02:43] (163.12s)

different sub workflows that it's come

[02:44] (164.72s)

up with that it thinks would be suitable

[02:46] (166.96s)

for this kind of business or this kind

[02:49] (169.20s)

of task they're trying to accomplish.

[02:51] (171.04s)

But wait, there's more. We don't just

[02:53] (173.60s)

create these subworkflow drafts. It

[02:55] (175.92s)

actually can create the subworkflows

[02:57] (177.92s)

themselves. So if we click from tab to

[02:59] (179.92s)

tab here, this is the first workflow put

[03:02] (182.72s)

together which is called the

[03:04] (184.08s)

sustainability impact agent where it has

[03:06] (186.64s)

access to different tools that it has

[03:08] (188.96s)

decided make the most sense given this

[03:11] (191.52s)

agent's ambitions. And once again, all of

[03:14] (194.24s)

these sub-agents have instructions of

[03:16] (196.24s)

their own, with reference material on

[03:18] (198.24s)

how to call their subtools as well. So

[03:21] (201.12s)

now we're basically creating agent

[03:22] (202.88s)

inception, where each agent has another

[03:25] (205.04s)

agent, and both of them have instructions

[03:27] (207.12s)

on how they should operate. If we keep

[03:28] (208.72s)

going, you'll see all the different

[03:30] (210.00s)

agents here from the lodge coordination

[03:32] (212.48s)

agent to the real-time conditions agent,

[03:35] (215.12s)

each not only with its own set of tools,

[03:37] (217.68s)

but also smart enough to decide whether

[03:39] (219.20s)

it should use an OpenAI (ChatGPT)

[03:41] (221.52s)

or Anthropic model for the given

[03:44] (224.64s)

task. So, we have that dynamic

[03:46] (226.80s)

nature there. And then you have your

[03:49] (229.12s)

entire set of agents with different

[03:51] (231.28s)

tools with different functionalities and

[03:53] (233.04s)

ambitions all reporting to the central

[03:55] (235.68s)

agent itself. Now, before we get into

[03:57] (237.28s)

the nitty-gritty of the prompt itself, I

[03:59] (239.76s)

want to walk you through the logic of

[04:01] (241.60s)

how this works and why this works. So,

[04:03] (243.76s)

not too long ago, we were all blessed

[04:05] (245.52s)

with yet another model. In this case, we

[04:07] (247.68s)

got the Claude 4 models, where we have

[04:09] (249.68s)

Claude 4 Sonnet and Claude 4 Opus. Not

[04:12] (252.64s)

only that, when you combine Claude 4

[04:15] (255.04s)

with extended thinking, a feature

[04:17] (257.20s)

available to all, as well as web search,

[04:19] (259.76s)

something also newly available in the

[04:21] (261.44s)

past month or so, it becomes a trifecta:

[04:24] (264.48s)

a perfect marriage of intelligence

[04:27] (267.52s)

with the ability to search as well as an

[04:30] (270.00s)

extension of reflection over time. If

[04:31] (271.76s)

you've watched my past video, you

[04:33] (273.52s)

already know that it's possible to

[04:35] (275.04s)

create out-of-the-box n8n workflows

[04:37] (277.76s)

just using Claude. And previously, I had

[04:40] (280.08s)

to supplement it with a cheat sheet, a

[04:42] (282.08s)

series of nodes for it to understand.

[04:43] (283.84s)

But now that we have web search at our

[04:45] (285.52s)

disposal and extended thinking together,

[04:48] (288.08s)

we can not only use the power of Claude

[04:50] (290.48s)

that is natively decent at n8n

[04:52] (292.56s)

workflows, but now we can really

[04:54] (294.24s)

supercharge it with examples of these

[04:56] (296.72s)

agents and just let it do monkey see,

[04:58] (298.96s)

monkey do: understand the structure,

[05:02] (302.08s)

understand how these tools are attached

[05:04] (304.16s)

and understand the relationship of

[05:06] (306.16s)

subworkflows to different types of

[05:08] (308.08s)

agents. Now the most central concept

[05:10] (310.32s)

here is the AI agent module in n8n.
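
To make the JSON side of this concrete, here is a minimal sketch of how an AI Agent node and the pieces attached to it can be expressed as an n8n-style workflow object. It assumes the node type strings `@n8n/n8n-nodes-langchain.agent`, `@n8n/n8n-nodes-langchain.lmChatOpenAi` and `n8n-nodes-base.slackTool`, and it uses a simplified field set. A real export from your own n8n version carries more fields (node ids, credentials, settings) and may use different `typeVersion` values, so compare against one before relying on this. The key idea is that sub-nodes such as the chat model and the tools point *at* the agent, under connection types like `ai_languageModel` and `ai_tool`.

```python
import json


def node(name, node_type, x, y, parameters=None, type_version=1):
    """One node entry in a simplified n8n-style export (field set is an assumption)."""
    return {
        "parameters": parameters or {},
        "name": name,
        "type": node_type,
        "typeVersion": type_version,
        "position": [x, y],
    }


def attach(target, kind):
    """Connection entry that wires a sub-node (model, memory, tool) into `target`."""
    return {kind: [[{"node": target, "type": kind, "index": 0}]]}


def minimal_agent_workflow():
    nodes = [
        node("AI Agent", "@n8n/n8n-nodes-langchain.agent", 0, 0),
        node("OpenAI Chat Model", "@n8n/n8n-nodes-langchain.lmChatOpenAi", -200, 200),
        node("Slack", "n8n-nodes-base.slackTool", 200, 200),
    ]
    # Sub-nodes are the *source* of each link and the agent is the target,
    # keyed by connection type instead of the usual "main".
    connections = {
        "OpenAI Chat Model": attach("AI Agent", "ai_languageModel"),
        "Slack": attach("AI Agent", "ai_tool"),
    }
    return {"name": "Minimal agent sketch", "nodes": nodes, "connections": connections}


if __name__ == "__main__":
    print(json.dumps(minimal_agent_workflow(), indent=2))
```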

[05:13] (313.68s)

And this module is based on something

[05:15] (315.36s)

called LangChain, which is a framework

[05:17] (317.68s)

that really changed the entire course of

[05:19] (319.68s)

the n8n community, where now you have

[05:22] (322.00s)

some central agent that takes in a

[05:23] (323.52s)

prompt that can speak to different

[05:25] (325.44s)

tools, can use a language model and can

[05:28] (328.00s)

use an internal memory. And overall,

[05:30] (330.08s)

n8n, like other automation tools,

[05:32] (332.72s)

uses JSON, which stands for JavaScript

[05:35] (335.36s)

Object Notation, to define these

[05:38] (338.56s)

workflows and bring them to life the way

[05:40] (340.24s)

you see them visually. The fact that

[05:41] (341.76s)

they're all based on JSON allows us to

[05:44] (344.32s)

manipulate and generate these JSONs

[05:47] (347.04s)

using a language model like Claude 4

[05:48] (348.96s)

Opus to basically

[05:50] (350.96s)

create the JSON itself, the entire

[05:52] (352.72s)

schema, and import it into n8n. But

[05:55] (355.60s)

unlike typical workflows you would have

[05:57] (357.68s)

seen in my tutorial and probably tons of

[05:59] (359.68s)

other tutorials coming out, this AI

[06:02] (362.00s)

agent tool is special not just in the

[06:04] (364.64s)

sense of what it can do but in how it

[06:06] (366.64s)

operates. So if we take a look at the

[06:08] (368.40s)

tools here, you can't just use any tool

[06:11] (371.60s)

from a specific provider. Only certain

[06:13] (373.76s)

functionalities or methods are

[06:15] (375.92s)

available to the AI agent, and they aren't

[06:18] (378.48s)

all of the functionalities the regular node

[06:20] (380.16s)

offers. For example, you might have a

[06:21] (381.84s)

node that allows you to watch new rows

[06:24] (384.40s)

being added to a Google Sheet, right?

[06:26] (386.32s)

And every time you watch a new row come

[06:28] (388.48s)

in, that triggers an entire workflow.

[06:31] (391.12s)

Technically, that wouldn't play very well

[06:32] (392.96s)

with an AI agent. An AI

[06:34] (394.96s)

agent wants some form of very

[06:37] (397.04s)

specific action to occur that's

[06:39] (399.44s)

invoked by the agent

[06:40] (400.88s)

itself. So taking the Google Sheets

[06:42] (402.56s)

example, it would be able to add a new

[06:44] (404.88s)

row, retrieve rows, search rows,

[06:47] (407.52s)

something that's very functional and

[06:48] (408.96s)

isn't trigger-based.
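
The trigger-versus-action distinction can also be checked mechanically on a generated workflow. This sketch flags any node that is wired into an agent through an `ai_tool` connection but whose type looks like a trigger. It relies on a heuristic, not a documented rule: it assumes n8n trigger node types end in `Trigger` (for example `n8n-nodes-base.asanaTrigger`).

```python
def trigger_nodes_used_as_tools(workflow: dict) -> list[str]:
    """Return names of nodes connected via ai_tool whose type looks like a trigger.

    Heuristic (assumption): trigger node types end in "Trigger",
    e.g. "n8n-nodes-base.asanaTrigger".
    """
    types_by_name = {n["name"]: n.get("type", "") for n in workflow.get("nodes", [])}
    offenders = []
    for source, outputs in workflow.get("connections", {}).items():
        if "ai_tool" in outputs and types_by_name.get(source, "").endswith("Trigger"):
            offenders.append(source)
    return offenders


if __name__ == "__main__":
    example = {
        "nodes": [
            {"name": "AI Agent", "type": "@n8n/n8n-nodes-langchain.agent"},
            {"name": "New Asana event", "type": "n8n-nodes-base.asanaTrigger"},
            {"name": "Append row", "type": "n8n-nodes-base.googleSheetsTool"},
        ],
        "connections": {
            "New Asana event": {"ai_tool": [[{"node": "AI Agent", "type": "ai_tool", "index": 0}]]},
            "Append row": {"ai_tool": [[{"node": "AI Agent", "type": "ai_tool", "index": 0}]]},
        },
    }
    print(trigger_nodes_used_as_tools(example))  # ['New Asana event']
```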

[06:52] (412.16s)

So, keeping that in mind, if you were

[06:53] (413.92s)

just to ask Claude out of the box, "Build

[06:58] (418.80s)

me an AI agent workflow," you could get

[07:00] (420.72s)

some decent results, but it

[07:02] (422.44s)

will most likely struggle to

[07:05] (425.04s)

delineate between a few things: what

[07:06] (426.48s)

tools it can use,

[07:09] (429.36s)

which nodes it can write JSON for

[07:11] (431.44s)

to represent those tools, and,

[07:14] (434.08s)

most importantly, which tiers of

[07:15] (435.60s)

methods it has access

[07:17] (437.60s)

to as an AI agent, which is different

[07:20] (440.32s)

from a standard workflow you put

[07:21] (441.92s)

together and edit

[07:23] (443.04s)

in n8n. And I'm not

[07:23] (443.04s)

spoiling the rest of the video by

[07:24] (444.32s)

telling you that the crux of being able

[07:26] (446.56s)

to do this entire process relies on the

[07:29] (449.28s)

ability to create these tools reliably

[07:31] (451.84s)

in the exact way that the AI agent node

[07:34] (454.80s)

expects. So the overall goal is that

[07:36] (456.96s)

we're able to create a series of JSONs.

[07:39] (459.76s)

One that acts as our orchestrator and

[07:42] (462.40s)

the others that act as our sub-agents.

[07:44] (464.88s)

Ideally, none of these have their

[07:46] (466.72s)

own subworkflows, because then you'll

[07:48] (468.48s)

have agents with subworkflows with

[07:50] (470.56s)

subworkflows, and this entire chain can

[07:52] (472.80s)

keep going on. Now you can totally do

[07:54] (474.64s)

that if you wish, but for simplicity's sake

[07:57] (477.12s)

I ideally wanted to just go from

[07:59] (479.52s)

orchestrating agent to subworkflows

[08:01] (481.68s)

that all have tools. So that's the

[08:03] (483.36s)

general structure that we're going for

[08:04] (484.96s)

at least with our approach. So now that

[08:06] (486.72s)

we have that background, we're safe to

[08:08] (488.64s)

dive straight into this prompt. And just

[08:10] (490.72s)

for the pure comprehension of every

[08:12] (492.64s)

part, I will read through it and

[08:14] (494.40s)

basically give a voice over for the

[08:16] (496.16s)

parts you should really care about.
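
The target layout described above can be sanity-checked across the generated files. That layout is one orchestrator that calls sub-agent workflows, with sub-agents that only have tools and no subworkflows of their own. This is a minimal sketch that assumes sub-workflow calls appear as nodes of type `@n8n/n8n-nodes-langchain.toolWorkflow`. Confirm that type string against your own export.

```python
# Assumed node type for "call another workflow as a tool"; verify against your export.
SUBWORKFLOW_TOOL = "@n8n/n8n-nodes-langchain.toolWorkflow"


def count_subworkflow_tools(workflow: dict) -> int:
    """Count nodes that call another workflow as an agent tool."""
    return sum(1 for n in workflow.get("nodes", []) if n.get("type") == SUBWORKFLOW_TOOL)


def check_hierarchy(orchestrator: dict, sub_agents: list[dict]) -> list[str]:
    """Report violations of the orchestrator -> sub-agents -> tools layout (one level deep)."""
    problems = []
    if count_subworkflow_tools(orchestrator) == 0:
        problems.append("orchestrator does not call any sub-workflows")
    for wf in sub_agents:
        if count_subworkflow_tools(wf) > 0:
            problems.append(f"{wf.get('name', '?')} calls its own sub-workflows")
    return problems


if __name__ == "__main__":
    master = {"name": "Master", "nodes": [{"name": "Call Lodge Agent", "type": SUBWORKFLOW_TOOL}]}
    lodge = {"name": "Lodge Coordination Agent",
             "nodes": [{"name": "Slack", "type": "n8n-nodes-base.slackTool"}]}
    print(check_hierarchy(master, [lodge]))  # []
```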

[08:17] (497.44s)

Okay, so let's give it a read. You are

[08:19] (499.76s)

an expert n8n workflow architect and

[08:22] (502.32s)

systems designer. Your primary mission

[08:25] (505.12s)

is to generate a comprehensive,

[08:27] (507.12s)

functional, and importable n8n AI agent

[08:30] (510.32s)

system based on the provided business

[08:32] (512.76s)

description, strictly emulating the

[08:35] (515.12s)

structural patterns, node types and

[08:36] (516.88s)

connection methods. So in this case, I'm

[08:38] (518.64s)

just giving it a series of examples

[08:40] (520.40s)

here, especially for the AI agent

[08:42] (522.40s)

nodes and their tools via ai_tool. So

[08:45] (525.52s)

this here is a part of the underlying

[08:47] (527.60s)

JSON that basically denotes to the agent

[08:50] (530.40s)

what is attached to that agent and

[08:52] (532.16s)

that's where the attachment of the tools

[08:54] (534.08s)

comes into play. We then say your

[08:55] (535.68s)

paramount goals are to ensure all

[08:57] (537.28s)

generated n8n workflow JSON is 100%

[09:00] (540.16s)

valid (meaning it's not corrupt),

[09:01] (541.84s)

importable and entirely free of property

[09:04] (544.48s)

value errors. Now what are property

[09:06] (546.48s)

value errors? These errors pop up quite

[09:08] (548.48s)

a bit when the JSON is generated by some

[09:10] (550.80s)

form of language model and it's missing

[09:12] (552.72s)

key parameters or key components that

[09:14] (554.88s)

n8n expects. It needs those to

[09:17] (557.20s)

visualize the workflow the way you see it

[09:18] (558.80s)

on screen, and without them it

[09:20] (560.56s)

can't actually import

[09:22] (562.56s)

it. So, I'm trying to have it reflect

[09:24] (564.80s)

using that extended thinking function

[09:26] (566.72s)

and make sure that before we import it

[09:28] (568.80s)

into n8n, there's a very high

[09:30] (570.64s)

likelihood that it's going to actually

[09:32] (572.40s)

work. Next, I told it that there are

[09:33] (573.92s)

going to be two distinct stages. First,

[09:36] (576.24s)

after analyzing the business description

[09:38] (578.08s)

provided at the end of this message, you

[09:40] (580.40s)

must conceptualize and list directly in

[09:42] (582.48s)

the chat six to eight potential

[09:44] (584.32s)

specialized AI agent names. So, in this

[09:46] (586.40s)

case, I'm saying I want you to come up

[09:48] (588.32s)

with six to eight ideas. Brainstorm on

[09:51] (591.20s)

the types of agents we want to create.

[09:53] (593.04s)

This gives us a baseline to actually

[09:54] (594.64s)

work from. Now the next part is for each

[09:57] (597.04s)

of the conceptual agents provide a

[09:58] (598.80s)

concise

[10:03] (603.96s)

one-sentence … nodes or verifiable public

[10:06] (606.60s)

APIs that your web search for tools not

[10:09] (609.44s)

covered in the provided examples indicates

[10:11] (611.44s)

would be the most appropriate for these

[10:12] (612.88s)

tasks. Do not proceed with any

[10:14] (614.88s)

unverified or hallucinated tools or

[10:17] (617.04s)

APIs. Now what is this last part about

[10:19] (619.52s)

here? Hallucinated tools or APIs. Once

[10:22] (622.24s)

in a while, even using Opus, it will

[10:24] (624.64s)

create a tool that calls a fictional,

[10:27] (627.44s)

non-existent API specific to company X.

[10:31] (631.04s)

So imagine you said company X has these

[10:33] (633.12s)

services, they have this stack. It might

[10:35] (635.36s)

accidentally create an HTTP request tool,

[10:37] (637.52s)

which is a request to an API, point it at

[10:41] (641.56s)

company.x.api, and basically make it out

[10:43] (643.76s)

of thin air, which is not what we want.
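
One cheap guard against invented endpoints is to compare every HTTP tool's host against a list of APIs you have actually verified. This is a sketch under assumptions. The HTTP tool node type strings and the `url` parameter name below are guesses, so check them against your own export. `api.companyx.example` is a made-up host standing in for the hallucinated company API. URLs written as n8n expressions have no parseable host, so they are flagged for manual review.

```python
from urllib.parse import urlparse

# Assumed type strings for HTTP-request tools; adjust to what your n8n version exports.
HTTP_TOOL_TYPES = {
    "n8n-nodes-base.httpRequestTool",
    "@n8n/n8n-nodes-langchain.toolHttpRequest",
}


def unverified_http_tools(workflow: dict, verified_hosts: set[str]) -> list[tuple[str, str]]:
    """Return (node name, host or raw url) for HTTP tools whose host is not verified."""
    flagged = []
    for n in workflow.get("nodes", []):
        if n.get("type") not in HTTP_TOOL_TYPES:
            continue
        url = n.get("parameters", {}).get("url", "")
        host = urlparse(url).hostname or ""  # expressions like "={{ ... }}" yield no host
        if host not in verified_hosts:
            flagged.append((n["name"], host or url))
    return flagged


if __name__ == "__main__":
    wf = {"nodes": [
        {"name": "Weather", "type": "n8n-nodes-base.httpRequestTool",
         "parameters": {"url": "https://api.open-meteo.com/v1/forecast"}},
        {"name": "Orders", "type": "n8n-nodes-base.httpRequestTool",
         "parameters": {"url": "https://api.companyx.example/orders"}},
    ]}
    print(unverified_http_tools(wf, {"api.open-meteo.com"}))
```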

[10:45] (645.84s)

We want our tools to have a high

[10:47] (647.28s)

likelihood of being grounded and being

[10:49] (649.84s)

actually functional. And from these six

[10:51] (651.68s)

to eight ideas we come up with, we

[10:53] (653.04s)

actually just want to start with

[10:54] (654.56s)

creating three of the most impactful of

[10:57] (657.04s)

these workflows. Now, there are two

[10:58] (658.64s)

different reasons why I'm saying three

[10:59] (659.84s)

here. First of all, if you let it create

[11:02] (662.16s)

six to eight workflows in one shot and

[11:04] (664.64s)

you're just on the Claude Pro plan using

[11:07] (667.12s)

Claude Opus and extended thinking, you

[11:09] (669.28s)

might completely use all your credits in

[11:12] (672.16s)

one shot. So when I say three, it just

[11:14] (674.88s)

gives you the ability to quickly audit

[11:16] (676.88s)

whether or not it's working, whether or

[11:18] (678.64s)

not it's adding the tools you'd expect

[11:20] (680.64s)

before you commit and donate all your

[11:23] (683.12s)

credits for the next 6 to 7 hours to

[11:25] (685.44s)

Anthropic. And the second reason is

[11:27] (687.04s)

obviously time because this will take at

[11:28] (688.56s)

least 5 to 10 minutes to put together

[11:30] (690.56s)

and you don't want to wait half an hour

[11:32] (692.32s)

only to find out that seven of your

[11:34] (694.32s)

workflows are completely unusable.
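
The "three first, the rest later" approach is just a ranked split of the brainstormed list. This is a minimal sketch that assumes you (or the model) attach a rough impact score to each idea. The first three agent names come from the Flexiflow example in this video. The other three names and all the scores are hypothetical.

```python
def plan_batches(ideas: list[tuple[str, int]], first_batch: int = 3) -> tuple[list[str], list[str]]:
    """Rank (agent_name, impact_score) pairs and split them into an audit batch and the rest."""
    ranked = sorted(ideas, key=lambda pair: pair[1], reverse=True)  # highest impact first
    names = [name for name, _ in ranked]
    return names[:first_batch], names[first_batch:]


if __name__ == "__main__":
    ideas = [  # scores and the last three names are made up for illustration
        ("Client Request Handler", 9),
        ("Team Coordination", 7),
        ("Project Setup", 8),
        ("Reporting", 5),
        ("Invoice Follow-up", 4),
        ("Content Calendar", 6),
    ]
    now, later = plan_batches(ideas)
    print(now)    # ['Client Request Handler', 'Project Setup', 'Team Coordination']
    print(later)  # ['Content Calendar', 'Reporting', 'Invoice Follow-up']
```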

[11:36] (696.00s)

Now, this second stage is completely

[11:38] (698.00s)

optional. And if you want to move ahead

[11:40] (700.24s)

and complete the rest of the

[11:42] (702.08s)

initial draft of agents it came up

[11:43] (703.68s)

with, then you can just say, "Cool, you

[11:45] (705.52s)

did a great job. Let's finish off with

[11:47] (707.36s)

the rest of the agents." And then with

[11:48] (708.88s)

this instruction, it should know exactly

[11:50] (710.64s)

what its next step should be. Now, if we

[11:52] (712.64s)

scroll down, I want to focus on this

[11:54] (714.16s)

specific instruction here that says,

[11:56] (716.08s)

"These specialized agents should utilize

[11:58] (718.72s)

two to three with an absolute maximum of

[12:01] (721.52s)

five, if genuinely distinct, critical,

[12:04] (724.40s)

and verifiable, real tools, and must

[12:07] (727.12s)

have correctly connected response and

[12:09] (729.20s)

try again set nodes wired to the

[12:11] (731.28s)

respective AI agent node success and

[12:13] (733.60s)

error outputs." And what does that mean

[12:14] (734.80s)

in plain English? If we pop over to the

[12:17] (737.20s)

second tab here, all we're asking is

[12:19] (739.12s)

that whatever tools you choose, make

[12:21] (741.12s)

sure they're legit tools. They're not

[12:22] (742.64s)

made up. And number two, connect a "Set

[12:26] (746.16s)

Response" and a "Try Again" step to the AI

[12:29] (749.36s)

agent so that if something goes wrong, it

[12:31] (751.36s)

can try again in case there's some form

[12:32] (752.80s)

of temporary error. And with that, if we

[12:35] (755.36s)

go back, we just finish off by adding

[12:38] (758.56s)

the business description of the

[12:40] (760.32s)

underlying business. And this is what

[12:41] (761.92s)

makes this so powerful: you can reuse

[12:44] (764.00s)

this entire prompt and just change

[12:45] (765.76s)

the very bottom. And the reason why I

[12:47] (767.68s)

put the business at the very bottom

[12:49] (769.20s)

is that, when it comes to prompt engineering,

[12:51] (771.12s)

at least for now, a language model will

[12:53] (773.12s)

typically pay the most attention to the

[12:55] (775.36s)

very beginning and the very end of the

[12:57] (777.12s)

prompt. So we want to make sure that the

[12:59] (779.12s)

business and the underlying mechanisms

[13:01] (781.20s)

of that business are really paid

[13:02] (782.96s)

attention to by the language model. In

[13:04] (784.80s)

this case, I won't read all of this, but

[13:06] (786.48s)

pretty much it goes through this fake

[13:08] (788.24s)

business that I came up with that has a

[13:10] (790.32s)

series of different operations, and

[13:12] (792.64s)

we're just trying to find ways to

[13:14] (794.00s)

optimize for those operations. Now,

[13:15] (795.60s)

where this becomes super exciting is

[13:17] (797.44s)

when we add specifications for what kind

[13:19] (799.60s)

of tools we're using, and we find a way to

[13:22] (802.24s)

create a Claude project to make a much

[13:24] (804.40s)

more sophisticated version of this

[13:26] (806.32s)

prompt. But just in case you missed

[13:27] (807.68s)

something on this prompt, I'll be making

[13:29] (809.60s)

this available in the description below

[13:31] (811.52s)

so you can go through it, change it, and

[13:33] (813.60s)

do whatever you want to your heart's

[13:34] (814.80s)

content to optimize it for your one-shot

[13:37] (817.20s)

workflow. Now, for our next three

[13:38] (818.96s)

samples we're going to take a look at,

[13:40] (820.48s)

we're going to analyze three completely

[13:42] (822.64s)

hypothetical businesses. One is called

[13:45] (825.12s)

Flexiflow Studios. That's a TikTok

[13:47] (827.68s)

agency. We're going to look at a dessert

[13:49] (829.84s)

place called Unicorn Milkshake. And then

[13:52] (832.32s)

we're going to look at Chaos Coffee.
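
Because the business description sits at the very end, the same prompt can be reused across these businesses by swapping only the tail. This is a minimal sketch of that assembly. `base_instructions` stands in for the full prompt, which is not reproduced here. The one-line descriptions are placeholders, not the ones used in the video.

```python
from dataclasses import dataclass, field


@dataclass
class Business:
    name: str
    description: str
    tools: list[str] = field(default_factory=list)


def build_prompt(base_instructions: str, business: Business) -> str:
    """Instructions first, business details last (the end of a prompt tends to get more attention)."""
    return (
        f"{base_instructions.strip()}\n\n"
        f"Business name: {business.name}\n"
        f"Tools used: {', '.join(business.tools)}\n"
        f"Business description: {business.description.strip()}\n"
    )


if __name__ == "__main__":
    base = "<full agent-army prompt goes here>"
    businesses = [
        Business("Flexiflow Studios", "A TikTok agency.", ["ClickUp", "Airtable", "Slack", "Google Sheets"]),
        Business("Unicorn Milkshake", "A dessert place.", ["Zoom", "monday.com"]),
    ]
    for b in businesses:
        print(build_prompt(base, b))
```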

[13:54] (834.48s)

Each of them uses different tools but

[13:57] (837.28s)

they have some similarities. So

[13:59] (839.20s)

Flexiflow uses things like ClickUp,

[14:01] (841.76s)

Airtable, Slack, and Google. And then

[14:04] (844.56s)

Unicorn Milkshake uses Zoom, some of

[14:07] (847.60s)

those same tools, and monday.com. And

[14:10] (850.08s)

then Chaos Coffee uses a mixture of what

[14:12] (852.72s)

both of these use. Now this is a

[14:14] (854.48s)

purposeful example because of the big

[14:16] (856.32s)

trick and the big nugget I'm about to

[14:18] (858.24s)

show you next. If we pop into our Claude

[14:20] (860.24s)

project, we have quite a few different

[14:22] (862.00s)

things going on here. We have a cheat

[14:24] (864.16s)

sheet guide that we put together. We

[14:26] (866.32s)

also have a special file here called

[14:30] (870.60s)

agents_tools.json. And this is going to

[14:32] (872.16s)

be the golden nugget you're going to

[14:33] (873.44s)

learn from this video. And then we have

[14:35] (875.44s)

just another set of workflows that have

[14:37] (877.76s)

some form of master orchestrating agent

[14:39] (879.68s)

and sub-agents. And what I'll do is, along

[14:42] (882.24s)

with that prompt I provided you

[14:43] (883.76s)

initially, I'll also provide you with a

[14:45] (885.76s)

series of files that you can use as well

[14:47] (887.68s)

to add to a project or use in a prompt.
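
A file like agents_tools.json is useful as reference material because the tool nodes, and the operations they use, can be read straight out of it. This is a sketch of that extraction under two assumptions. First, tool nodes are the sources of `ai_tool` connections. Second, a node's action lives in its `resource`/`operation` parameters, which is true for many n8n nodes but not guaranteed for all.

```python
import json
from collections import defaultdict


def tool_catalog(workflow: dict) -> dict[str, list[str]]:
    """Map each tool node type wired via ai_tool to the resource/operation pairs it uses."""
    nodes = {n["name"]: n for n in workflow.get("nodes", [])}
    catalog = defaultdict(set)
    for source, outputs in workflow.get("connections", {}).items():
        if "ai_tool" not in outputs or source not in nodes:
            continue
        node = nodes[source]
        params = node.get("parameters", {})
        # Assumption: the action is stored in "resource" and/or "operation" parameters.
        parts = [p for p in (params.get("resource"), params.get("operation")) if p]
        catalog[node["type"]].add("/".join(parts) or "(default)")
    return {node_type: sorted(ops) for node_type, ops in sorted(catalog.items())}


def load_catalog(path: str) -> dict[str, list[str]]:
    """Read an exported workflow file (e.g. agents_tools.json) and summarize its tools."""
    with open(path, encoding="utf-8") as f:
        return tool_catalog(json.load(f))
```

The resulting summary (node type plus the operations actually wired to the agent) is compact enough to paste into a Claude project as reference material alongside the full JSON.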

[14:50] (890.08s)

So you can use this as well without

[14:51] (891.36s)

having to build that initial workflow

[14:53] (893.12s)

yourself. Now, this prompt took

[14:56] (896.88s)

so much time that I don't want it to be

[14:56] (896.88s)

copycatted all over YouTube. So this

[14:59] (899.28s)

prompt will be available to my early AI

[15:01] (901.36s)

adopters community members exclusively

[15:03] (903.28s)

in the community. But for the rest, I

[15:05] (905.52s)

will walk you through how this agents_tools

[15:07] (907.84s)

file works because this will open

[15:10] (910.48s)

so many doors for you. If we go into

[15:12] (912.24s)

this agents_tools workflow in n8n, you'll think that

[15:14] (914.80s)

I'm a madman for putting one AI agent

[15:18] (918.80s)

with so many tools. Now, do I

[15:22] (922.72s)

intend on ever running this workflow?

[15:25] (925.28s)

No. What I'm doing is a bit of a cheat

[15:27] (927.36s)

code. As I mentioned before, if you go

[15:30] (930.00s)

to something like, let's say, Asana,

[15:32] (932.40s)

which is a project management tool, and

[15:34] (934.48s)

you go under

[15:35] (935.72s)

options, while you can use all of these

[15:37] (937.92s)

in n8n, the AI agent module, like I said

[15:41] (941.04s)

before, can't necessarily use all of

[15:42] (942.96s)

these tools. It can use a subset of

[15:45] (945.36s)

these different methods. So, if I had

[15:47] (947.52s)

some form of trigger action, let's go

[15:49] (949.44s)

here on a new Asana event. Let's just

[15:51] (951.92s)

bring this to the board because this is

[15:53] (953.12s)

the easiest way for you to understand

[15:55] (955.28s)

what's happening. I physically can't

[15:57] (957.60s)

connect this as a tool. It will not

[15:59] (959.60s)

accept it because this is a trigger.

[16:01] (961.52s)

It's not something that the agent module

[16:03] (963.12s)

can actually play nice with. Which is

[16:05] (965.44s)

why you'll see that when you add a tool

[16:08] (968.32s)

to the agent module and you click on

[16:10] (970.64s)

Asana, we won't have as many options as

[16:14] (974.00s)

we saw before. I think we had 22 options

[16:16] (976.80s)

before, but now we can only do these

[16:19] (979.44s)

operations using the agent module, which

[16:22] (982.08s)

is where the complexity comes in, the kind

[16:23] (983.84s)

that's made me spend hours trying to

[16:26] (986.32s)

figure this out. And keep in mind that a lot

[16:28] (988.08s)

of businesses use services like Zoho,

[16:30] (990.32s)

Monday, or ClickUp. Those are

[16:32] (992.72s)

real services that many businesses

[16:34] (994.32s)

rely on; not all businesses use Airtable,

[16:36] (996.56s)

and not all businesses use Google

[16:38] (998.08s)

Sheets. So what happens if you have

[16:39] (999.76s)

these kinds of tools in your toolbox?

[16:42] (1002.88s)

Well, if we can't use web search

[16:46] (1006.00s)

reliably to understand how to attach

[16:48] (1008.40s)

these to the agent, and if we don't have

[16:50] (1010.48s)

a knowledge base we want to constantly

[16:52] (1012.16s)

feed with examples of workflows using these

[16:57] (1017.28s)

exact tools, what we could do is just

[16:59] (1019.44s)

put all of the tools that we care about,

[17:01] (1021.76s)

attach them to one agent, and then

[17:05] (1025.12s)

download that as a JSON, and technically

[17:06] (1026.88s)

we can use that as our mini knowledge

[17:10] (1030.16s)

base now to pseudo-fine-tune our agent

[17:12] (1032.48s)

in Claude to understand how to put

[17:15] (1035.36s)

together a Slack connection to an agent,

[17:17] (1037.36s)

how to put together an Asana connection to

[17:17] (1037.36s)

an agent. Same with Monday, same with

[17:19] (1039.20s)

Zoho. So, this becomes your cheat code

[17:21] (1041.52s)

where you can use whatever you want

[17:22] (1042.96s)

depending on your particular business or

[17:25] (1045.28s)

service you're offering. You can add

[17:27] (1047.12s)

whatever node you wish. Let's say a

[17:29] (1049.12s)

Qdrant node, or let's say an Airtop

[17:32] (1052.40s)

tool node. And then you can just hook up

[17:34] (1054.56s)

all the different functionalities you

[17:36] (1056.08s)

think you'd want to use and then use

[17:37] (1057.92s)

that JSON as a part of your knowledge

[17:40] (1060.32s)

base to allow Claude to have a better

[17:42] (1062.64s)

understanding of how to put everything

[17:44] (1064.56s)

together when it comes to the AI agent

[17:46] (1066.24s)

module. Once you have that put together

[17:48] (1068.00s)

along with the cheat sheet, you now have

[17:49] (1069.92s)

something super potent where you only

[17:52] (1072.00s)

have to provide a description of a

[17:53] (1073.92s)

business as well as the tools used in

[17:55] (1075.84s)

that business and you can crank out

[17:57] (1077.84s)

these workflows fairly reliably over and

[18:00] (1080.08s)

over again. And for our first example,

[18:01] (1081.76s)

we have Flexiflow Studios, which is a

[18:04] (1084.16s)

beautiful name. Now, all we have as an

[18:06] (1086.24s)

instruction is build an agent army for

[18:08] (1088.80s)

this business. We describe the business

[18:11] (1091.04s)

itself and all we do is we just drop in

[18:14] (1094.08s)

the names of the tools. So, we're using

[18:16] (1096.32s)

Zoom, ClickUp, we're using Slack, some

[18:19] (1099.20s)

Google Sheets, some Airtable, and then

[18:21] (1101.44s)

we basically contextualize it in one big

[18:23] (1103.44s)

paragraph. And with our supercharged

[18:25] (1105.76s)

prompt I put together specifically for

[18:27] (1107.76s)

this Claude project, this just takes

[18:30] (1110.24s)

this specific snippet and then creates a

[18:33] (1113.60s)

list of hypothetical agents that it

[18:35] (1115.68s)

could put together. And then it creates

[18:37] (1117.44s)

a short list of three agents, a client

[18:40] (1120.56s)

request handler agent, a project setup

[18:43] (1123.12s)

agent, and a team coordination agent.

[18:45] (1125.92s)

And then after some contemplation, it

[18:48] (1128.32s)

puts together the JSON for the master

[18:50] (1130.68s)

coordinator, the request handler and the

[18:53] (1133.76s)

rest. And all you have to do is either

[18:55] (1135.84s)

download the actual text file or you can

[18:59] (1139.20s)

copy it and import it directly into n8n.
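
A quick structural check before pasting a generated file into n8n catches many of the "property value" style problems described earlier. These include missing fields and connections that point at nodes that don't exist. This is a sketch, not n8n's own validation. The required-key list is an assumption based on typical exports, and passing this check does not guarantee a clean import.

```python
# Assumed minimum per-node fields, based on typical n8n exports; adjust for your version.
REQUIRED_NODE_KEYS = ("name", "type", "typeVersion", "position", "parameters")


def validate_workflow(workflow: dict) -> list[str]:
    """Return human-readable problems found in a workflow dict (empty list = none found)."""
    nodes = workflow.get("nodes")
    if not isinstance(nodes, list):
        return ["missing 'nodes' list"]
    connections = workflow.get("connections")
    errors = []
    if not isinstance(connections, dict):
        errors.append("missing 'connections' object")
        connections = {}

    names = set()
    for i, node in enumerate(nodes):
        label = node.get("name", f"#{i}")
        for key in REQUIRED_NODE_KEYS:
            if key not in node:
                errors.append(f"node {label!r} missing {key!r}")
        if label in names:
            errors.append(f"duplicate node name {label!r}")
        names.add(label)

    # Every connection must start and end at a node that actually exists.
    for source, outputs in connections.items():
        if source not in names:
            errors.append(f"connection from unknown node {source!r}")
        for kind, branches in outputs.items():
            for branch in branches:
                for link in branch or []:  # an output with nothing attached may be empty
                    if link.get("node") not in names:
                        errors.append(f"{source!r} -> unknown node {link.get('node')!r} via {kind}")
    return errors
```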

[19:01] (1141.76s)

And what you get is the following where

[19:04] (1144.24s)

you have the Flexiflow master AI

[19:06] (1146.72s)

coordinator with all the subworkflows

[19:08] (1148.80s)

it's drafted, and then you have a draft

[19:11] (1151.04s)

of those subworkflows where you have

[19:12] (1152.88s)

things like Airtable, you have Slack,

[19:16] (1156.00s)

and notice how they're not invalid.

[19:18] (1158.08s)

They're all valid. We now have

[19:19] (1159.96s)

monday.com, we have Slack again, and

[19:22] (1162.80s)

they're not broken because it had that

[19:24] (1164.64s)

additional reference data, that cheat

[19:26] (1166.40s)

sheet of the different nodes that it

[19:27] (1167.92s)

could use and repurpose from. And then

[19:30] (1170.40s)

if we take a look at the final one here,

[19:32] (1172.56s)

we now have, you can see here, ClickUp

[19:34] (1174.64s)

as well as Zoom. And all of these are

[19:37] (1177.20s)

logical. So this one, a team

[19:39] (1179.04s)

coordination AI agent, has tools for

[19:42] (1182.16s)

scheduling Zoom meetings, checking team

[19:43] (1183.92s)

availability by sending messages,

[19:46] (1186.00s)

and creating tasks for that team.
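
The prompt sets rules for each sub-agent: two to three tools, five at most, and something wired to both the success and error outputs. These rules can be checked on the imported JSON too. This is a sketch under three assumptions taken from typical exports, all of which you should verify on your own instance: the agent type string, the `onError: "continueErrorOutput"` setting that enables a separate error output, and the convention that `main` output 0 is success and output 1 is error.

```python
AGENT_TYPE = "@n8n/n8n-nodes-langchain.agent"  # assumed type string


def tools_wired_to(workflow: dict, agent_name: str) -> int:
    """Count ai_tool links that end at the given agent."""
    count = 0
    for outputs in workflow.get("connections", {}).values():
        for branch in outputs.get("ai_tool", []):
            count += sum(1 for link in branch or [] if link.get("node") == agent_name)
    return count


def check_sub_agent(workflow: dict, min_tools: int = 2, max_tools: int = 5) -> list[str]:
    """Flag agents with too few/many tools or an unwired success/error output."""
    problems = []
    connections = workflow.get("connections", {})
    for agent in (n for n in workflow.get("nodes", []) if n.get("type") == AGENT_TYPE):
        name = agent["name"]
        n_tools = tools_wired_to(workflow, name)
        if not min_tools <= n_tools <= max_tools:
            problems.append(f"{name}: {n_tools} tools (expected {min_tools}-{max_tools})")
        # Assumption: a separate error output exists only when onError is "continueErrorOutput".
        if agent.get("onError") != "continueErrorOutput":
            problems.append(f"{name}: error output not enabled")
        main = connections.get(name, {}).get("main", [])
        for index, label in ((0, "success"), (1, "error")):
            if index >= len(main) or not main[index]:
                problems.append(f"{name}: nothing wired to {label} output")
    return problems
```

Raising `max_tools` is the code-side counterpart of telling the prompt to draft five tools per agent.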

[19:48] (1188.56s)

And if you so wanted to add more tools,

[19:50] (1190.80s)

you could just change the underlying

[19:52] (1192.16s)

prompt and tell it, you know what, draft

[19:54] (1194.40s)

five tools for each thing. Now, as you

[19:56] (1196.56s)

add more tools, you might add some more

[19:58] (1198.88s)

bloat, some unnecessary tooling, but the

[20:01] (1201.52s)

whole point of this exercise is to get

[20:03] (1203.52s)

you started, taking you from zero to

[20:05] (1205.68s)

80%. Is this going to be perfect out of

[20:08] (1208.08s)

the box? Is this going to run on its

[20:09] (1209.76s)

first try? No. But being able to set the

[20:11] (1211.84s)

foundation with this head start will

[20:14] (1214.00s)

help you speed things up and also help

[20:16] (1216.32s)

you brainstorm in a short amount of time

[20:18] (1218.40s)

what could be possible. For the second

[20:20] (1220.16s)

business, we have Pet Pal Concierge, which

[20:22] (1222.56s)

is the Uber for pet care, connecting busy

[20:24] (1224.72s)

pet parents with trusted local sitters.

[20:27] (1227.04s)

In this case, we seem to also be using

[20:28] (1228.80s)

Airtable, Slack, Zoom, and this

[20:32] (1232.32s)

time Asana right here. And then we get the

[20:35] (1235.60s)

following workflows where we have the

[20:37] (1237.44s)

master agent with a series of different

[20:40] (1240.00s)

nodes. We have the emergency care

[20:42] (1242.48s)

coordinator, the provider management

[20:44] (1244.48s)

agent, the booking and scheduling agent,

[20:46] (1246.88s)

and then something like the photo update

[20:48] (1248.72s)

agent. I would imagine the photos of the

[20:50] (1250.48s)

pets themselves, maybe their profiles on

[20:52] (1252.56s)

some form of portal or website. And in

[20:54] (1254.72s)

terms of the subworkflows, we have the

[20:57] (1257.28s)

emergency care AI agent that has access

[20:59] (1259.60s)

to Airtable to search available

[21:01] (1261.36s)

providers for a given dog's doctors. And

[21:04] (1264.56s)

then we have Slack to alert nearby

[21:06] (1266.80s)

providers. And then for Asana, we have

[21:09] (1269.60s)

create urgent task if needed. So it's

[21:11] (1271.84s)

trying to come up with and rationalize

[21:13] (1273.76s)

through different workflows. And like I

[21:15] (1275.68s)

said before, each one has a starter

[21:18] (1278.00s)

prompt that you can use that's already

[21:19] (1279.68s)

pretty sophisticated out of the box. And

[21:21] (1281.76s)

all you have to do is fine-tune

[21:23] (1283.60s)

it for your specific use case. We also

[21:26] (1286.00s)

have a provider management AI agent that

[21:28] (1288.48s)

uses, in this case, monday.com, Airtable,

[21:31] (1291.04s)

and Gmail. And then we have one more

[21:33] (1293.28s)

which is the booking and scheduling agent that

[21:34] (1294.88s)

uses a combination of Google Sheets, Asana,

[21:37] (1297.60s)

and scheduling a consultation using

[21:39] (1299.36s)

Zoom. So now that we have all the puzzle

[21:43] (1303.60s)

pieces set up, it can just pick and

[21:43] (1303.60s)

choose these different nodes and we

[21:45] (1305.36s)

don't have to obsess over the

[21:46] (1306.80s)

functionality, the fact that these nodes

[21:48] (1308.88s)

are connecting properly to the agent. It

[21:50] (1310.80s)

can now also focus on the higher level

[21:53] (1313.04s)

business decisions on what is practical.
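The cheat-sheet idea described here can also be enforced after generation: compare every node type in a generated workflow against the node types that appear in your cheat sheet of known-good nodes, and flag anything the model invented. This is a minimal sketch, assuming both the cheat sheet and the generated workflow are n8n-style JSON with a `nodes` list whose entries carry a `type` field. The helper names `known_node_types` and `unlisted_node_types`, the sample data, and the type strings are illustrative, so check the type strings against your own exported workflows.

```python
def known_node_types(cheat_sheet_workflows: list[dict]) -> set[str]:
    """Collect every node type that appears in the vetted cheat-sheet workflows."""
    return {
        node["type"]
        for workflow in cheat_sheet_workflows
        for node in workflow.get("nodes", [])
    }


def unlisted_node_types(generated: dict, allowed: set[str]) -> list[str]:
    """Return node types used in `generated` that are absent from `allowed`, sorted."""
    used = {node["type"] for node in generated.get("nodes", [])}
    return sorted(used - allowed)


# Illustrative data: one vetted cheat-sheet workflow and one generated sub-workflow.
cheat_sheet = [
    {
        "nodes": [
            {"name": "AI Agent", "type": "@n8n/n8n-nodes-langchain.agent"},
            {"name": "Slack", "type": "n8n-nodes-base.slack"},
            {"name": "Airtable", "type": "n8n-nodes-base.airtable"},
        ]
    }
]
generated = {
    "nodes": [
        {"name": "Emergency Care Agent", "type": "@n8n/n8n-nodes-langchain.agent"},
        {"name": "Alert Nearby Providers", "type": "n8n-nodes-base.slack"},
        # Hypothetical, deliberately invented type that is not in the cheat sheet.
        {"name": "Page On-Call Vet", "type": "n8n-nodes-base.vetPager"},
    ]
}

print(unlisted_node_types(generated, known_node_types(cheat_sheet)))
# ['n8n-nodes-base.vetPager']
```

An empty list means every node type already appears in the cheat sheet; anything returned is a candidate for the kind of broken, made-up node the cheat sheet is meant to prevent.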

[21:55] (1315.68s)

What kind of agents make the most sense

[21:57] (1317.36s)

for this kind of business given the

[21:59] (1319.28s)

profile. And last but not least, we have

[22:01] (1321.12s)

Chaos Coffee Co. that runs 15 quirky

[22:04] (1324.48s)

coffee shops known for their organized

[22:06] (1326.60s)

chaos. And in this case, we mention once

[22:09] (1329.84s)

again Google Sheets, Airtable, and

[22:11] (1331.36s)

ClickUp. Obviously, I could have added

[22:12] (1332.88s)

more. I just wanted to be able to use

[22:14] (1334.16s)

that one file for all of these use

[22:15] (1335.76s)

cases. So, just bear with me. And in

[22:18] (1338.24s)

this case, yet again, we're able to

[22:19] (1339.84s)

crank out this operator agent here that

[22:22] (1342.96s)

has its set of instructions. And then we

[22:25] (1345.52s)

have subworkflows like inventory

[22:27] (1347.44s)

ingredient discovery, a recipe

[22:29] (1349.44s)

innovation agent, and a quality control

[22:32] (1352.00s)

agent as well as a financial analytics

[22:34] (1354.48s)

agent. So, it's closely tailored to

[22:36] (1356.64s)

the specific business we have. And if we

[22:38] (1358.48s)

pop into the subworkflows, we have an

[22:41] (1361.12s)

inter-location coordinator AI agent that has

[22:43] (1363.60s)

access to track deliveries via

[22:45] (1365.24s)

monday.com, send coordination alerts in

[22:48] (1368.08s)

Slack, and then create a coordination

[22:49] (1369.92s)

task in ClickUp. We have an inventory

[22:53] (1373.60s)

discovery agent that also has the

[22:55] (1375.60s)

ability to, in this case, also update

[22:57] (1377.28s)

ingredients in the database in Airtable,

[22:59] (1379.04s)

update the inventory board in

[23:01] (1381.28s)

monday.com, and yet again create a task.

[23:04] (1384.00s)

And last but not least, we have my

[23:05] (1385.76s)

favorite, which is the recipe innovation

[23:07] (1387.92s)

agent that has access to schedule

[23:10] (1390.32s)

tasting sessions with Zoom, document

[23:12] (1392.64s)

recipes in Google Sheets, and announce

[23:14] (1394.64s)

any big recipes to the whole crew. I

[23:17] (1397.20s)

think there are 15 locations in this

[23:18] (1398.56s)

hypothetical company. So, this would be

[23:20] (1400.48s)

the final result here. And then you have

[23:22] (1402.80s)

yet another prompt

[23:24] (1404.32s)

orchestrating these agents. You can see

[23:26] (1406.00s)

it's pretty consistent from workflow to

[23:28] (1408.00s)

workflow. And that's pretty much it. So

[23:29] (1409.68s)

hopefully you found this as cool as I

[23:31] (1411.60s)

did building it and this will be

[23:33] (1413.28s)

something useful to you to create your

[23:35] (1415.36s)

own drafts of AI agent networks to get

[23:38] (1418.00s)

you off the ground and get you from 0 to

[23:39] (1419.92s)

80% as quickly as possible. Once again,

[23:42] (1422.00s)

if you want access to the very first

[23:43] (1423.52s)

prompt along with a sample agent network

[23:45] (1425.60s)

that you can use to try to repurpose

[23:47] (1427.60s)

this, then I'll make that available in

[23:49] (1429.28s)

the first link in the description below.

[23:50] (1430.64s)

But if you want access to the

[23:51] (1431.76s)

supercharged prompt along with the

[23:53] (1433.68s)

underlying cheat sheet guide for the

[23:55] (1435.20s)

Claude project, then that will be in my

[23:57] (1437.44s)

community in the second link in the

[23:58] (1438.96s)

description below where you'll have

[24:00] (1440.32s)

access to more mad scientist experiments

[24:02] (1442.40s)

than you can imagine and exclusive

[24:04] (1444.08s)

content that you'll never see on

[24:05] (1445.36s)

YouTube. Enjoy building and I'll see you

[24:07] (1447.04s)

in the next
